The 10-Second Stuck Test: How to Know If Your AI Coding Agent Is Actually Autonomous


Replit CEO Amjad Masad (@amasad) recently described the company's Agent 3 as '10× more autonomous,' adding that 'it keeps going where others get stuck.'

That claim suggests a simple test for evaluating any AI coding assistant: the 10-Second Stuck Test.

How It Works

- Give your AI agent a coding task

- Watch what happens when it hits an error

- Start a 10-second timer the moment the error appears

- If the agent self-debugs and continues within 10 seconds, it passes

- If it asks for help or stalls until the timer runs out, it fails
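To make the pass/fail rule concrete, here is a minimal Python sketch that scores a single run from a timestamped event log. It assumes your harness can record three hypothetical event kinds (`error`, `recovered`, `help_request`); how you capture them, for example by parsing the agent's output, is up to you. `Event` and `stuck_test` are illustrative names, not part of any tool mentioned in this post.

```python
from dataclasses import dataclass
from typing import Literal, Sequence

# Hypothetical event kinds your harness would record while watching the agent.
EventKind = Literal["error", "recovered", "help_request"]


@dataclass(frozen=True)
class Event:
    t: float         # seconds since the task started
    kind: EventKind


def stuck_test(events: Sequence[Event], window: float = 10.0) -> str:
    """Score one run: "pass", "fail", or "no_error" if nothing went wrong."""
    ordered = sorted(events, key=lambda e: e.t)
    first_error = next((e for e in ordered if e.kind == "error"), None)
    if first_error is None:
        return "no_error"
    deadline = first_error.t + window
    for e in ordered:
        if e.t <= first_error.t:
            continue             # skip events at or before the first error
        if e.t > deadline:
            break                # the 10-second window has closed
        if e.kind == "help_request":
            return "fail"        # asked a human before recovering
        if e.kind == "recovered":
            return "pass"        # self-debugged and continued in time
    return "fail"                # stalled: no recovery inside the window
```

The window is a parameter, so you can loosen the 10-second threshold for larger tasks without changing the scoring logic.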

Why This Matters

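Truly autonomous agents don't just generate code; they also debug and refactor it without human intervention. As @amasad notes, 'shipping real software takes hours of testing, debugging, and refactoring.' An agent that stalls at its first error can't carry that work on its own, and that is exactly what the test exposes.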

Quick Implementation Tips

- Start with small, scoped tasks

- Watch how the agent handles each error: does it retry, change approach, or ask for help?

- Track time-to-recovery

- Document where agents get stuck
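Across many trials, these tips reduce to a few numbers. The following sketch, again using hypothetical names (`Trial`, `passed`, `summarize`), computes a pass rate, the median time-to-recovery, and a list of stuck points. It assumes each trial stores the seconds until the agent continued on its own, or `None` if it asked for help or stalled.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional, Sequence


@dataclass
class Trial:
    task: str                     # the small, scoped task given to the agent
    error: str                    # short label for the error it hit
    recovery_s: Optional[float]   # seconds to self-recover; None if it asked or stalled


def passed(trial: Trial, window: float = 10.0) -> bool:
    return trial.recovery_s is not None and trial.recovery_s <= window


def summarize(trials: Sequence[Trial], window: float = 10.0) -> dict:
    """Pass rate, median time-to-recovery, and where the agent got stuck."""
    # Slow recoveries (beyond the window) still count toward the median.
    recoveries = [t.recovery_s for t in trials if t.recovery_s is not None]
    return {
        "trials": len(trials),
        "pass_rate": sum(passed(t, window) for t in trials) / len(trials) if trials else 0.0,
        "median_recovery_s": median(recoveries) if recoveries else None,
        # Failed trials double as the documented list of stuck points.
        "stuck_points": [(t.task, t.error) for t in trials if not passed(t, window)],
    }
```

Keeping the median separate from the pass rate lets you see whether an agent that fails the test is slow to recover or never recovers at all.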

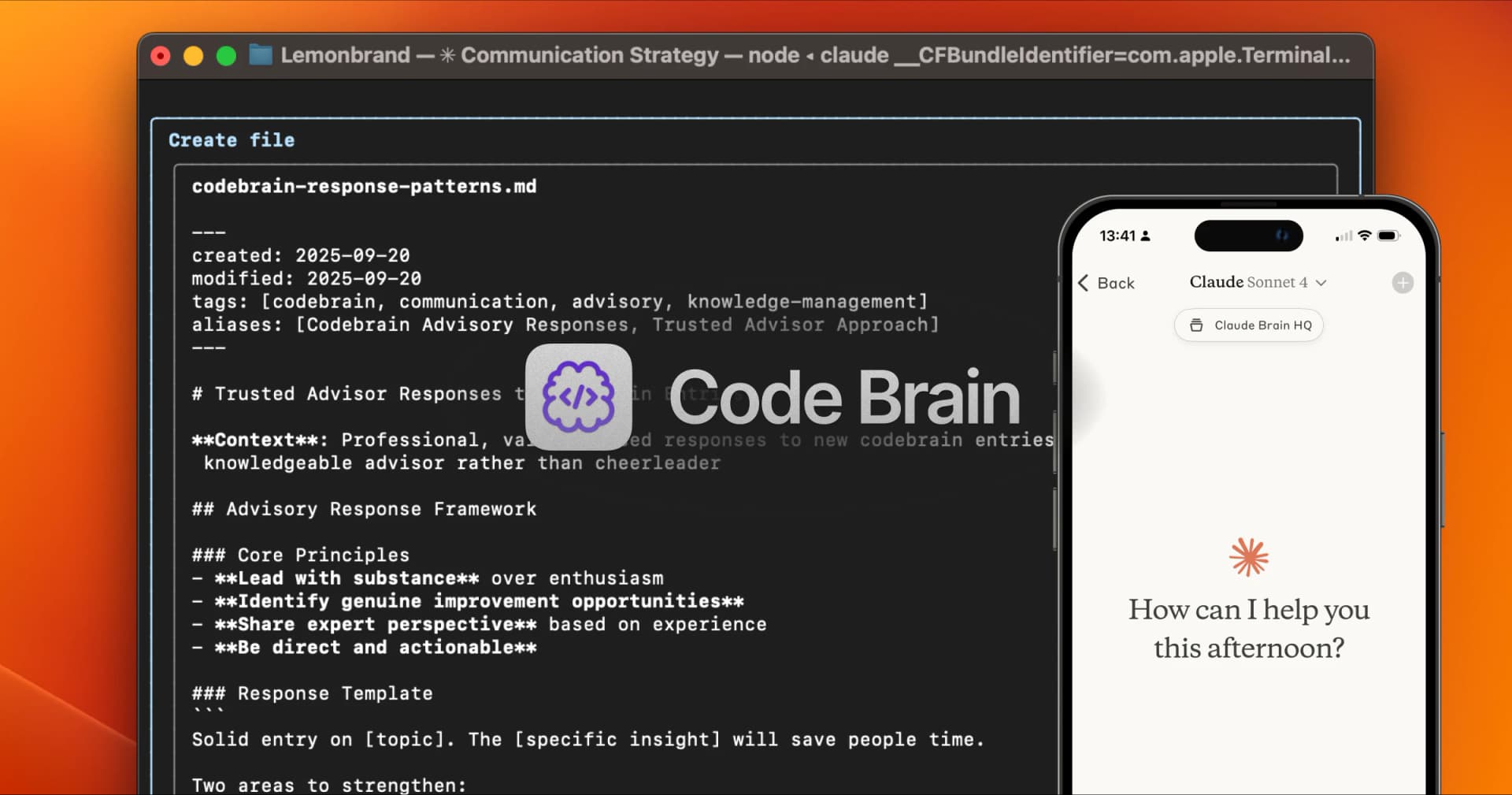

Running This With CodeBrain

- Open your Obsidian vault

- Use SuperWhisper to dictate: 'Run 10-second stuck test on [task]'

- Claude Code CLI will execute the test while Rube MCP logs results

- Review autonomous debugging patterns in your vault

- Use the 'agent-evaluation' template to track performance
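The post doesn't spell out the fields of the 'agent-evaluation' template, so the following is only a hypothetical sketch of what one logged run could look like as an Obsidian note with YAML frontmatter (which Obsidian reads as note properties). The field names, the `agent-evaluations` folder, and both functions are assumptions; adapt them to your own template.

```python
import json
from datetime import date
from pathlib import Path
from typing import Optional


def render_evaluation_note(agent: str, task: str, error: str,
                           recovery_s: Optional[float], run_date: date,
                           window: float = 10.0) -> str:
    """Build a markdown note for one stuck-test run (hypothetical template)."""
    passed = recovery_s is not None and recovery_s <= window
    lines = [
        "---",
        # json.dumps quotes strings and turns None into null, both valid YAML
        f"agent: {json.dumps(agent)}",
        f"task: {json.dumps(task)}",
        f"date: {run_date.isoformat()}",
        f"recovery_s: {json.dumps(recovery_s)}",
        f"result: {'pass' if passed else 'fail'}",
        "tags: [agent-evaluation]",
        "---",
        "",
        f"# Stuck test: {task}",
        "",
        f"- Error: {error}",
        "- Where it got stuck: ",
    ]
    return "\n".join(lines) + "\n"


def write_note(vault: Path, filename: str, note: str) -> Path:
    """Save the note under a hypothetical agent-evaluations/ folder in the vault."""
    folder = vault / "agent-evaluations"
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f"{filename}.md"
    path.write_text(note, encoding="utf-8")
    return path
```

Values are JSON-encoded because a JSON string is also a valid YAML scalar, so a task name containing a colon won't break the frontmatter.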

Your CodeBrain vault keeps everything private and searchable, the CLI tools let you run tests quickly, and the results logged through Rube MCP make it easier to spot patterns in agent behavior across many runs.

#ai #coding #autonomousagents #productivity

CodeBrain Content Engine